Abstract

The surface quality of any machined component or product greatly influences its attributes, including wear, corrosion resistance, and fatigue strength. Numerous safety-instrumented systems and other essential industrial systems require components with a high-quality surface finish. Surface roughness (Ra) is one of the key measurements that indicate the finishing quality of machined parts. In this paper, we begin by detailing the specifics and the Ra data set of an experimental study conducted on a commonly used abrasive machine, namely, a surface grinder. Next, machine learning algorithms are used for predictive modeling of Ra. The problem is framed using the Design of Experiments (DOE) to measure the Ra of EN8 steel plates with two types of cutting fluids: a conventional synthetic cutting fluid and an eco-friendly cashew nut shell liquid (CNSL). A total of 19 experiments were performed, with five input variables established at two levels each. To create a predictive model, various algorithms, including Linear Regression, Decision Tree, Random Forest, Epsilon-Support Vector Regression (ε-SVR), and K-Nearest Neighbors Regression, are applied to the data obtained through experiments. Computed metrics, including Mean Square Error (MSE), Mean Absolute Percentage Error (MAPE), and Coefficient of Determination ($R^2$), showed that ε-SVR outperformed all other methods. Therefore, its hyperparameters were tuned further. With this SVR model, predicted roughness values are displayed through a GUI.

1. Introduction

Machining has been a crucial element of the production industry for manufacturing spares, tools, equipment, etc., of very high precision and quality. Surface Grinding (SG) is generally the last step of machining, performed to impart the necessary functional features such as close dimensional tolerances and a good surface finish [1-3]. The surface roughness (Ra) is one of the significant performance parameters that must be monitored throughout the surface grinding operation, and it also signifies the finishing quality of the machined parts [4-6]. The input parameters that significantly affect the grinding output response Ra include the Cutting Fluid (CF), Grinding Wheel Grade (GWG), Grinding Wheel Speed (GWS), Work Speed (WS), and Depth of Cut (DOC) [4], [7]. The selection of optimal grinding settings therefore becomes necessary to achieve the desired Ra during the grinding operation [8], [9]. Machinists generally use a trial-and-error strategy to decide these input settings to get the desired surface finish. This trial-and-error approach is inefficient, time-consuming, and wasteful of resources. The literature shows that the Taguchi experimental design method is generally used along with other optimization methods, such as Grey Relational Analysis (GRA) and Response Surface Methodology (RSM), to decide the optimal grinding input settings. The development of Machine Learning (ML) predictive models also offers a solution to this problem, since the settings can be altered and the response predicted before the actual grinding operation is performed [10].

The other challenge associated with the grinding operation is the generation of intense heat during grinding and the induction of thermal stresses, which hamper the surface finish, dimensional accuracy, grain structure, and mechanical properties of the ground workpiece [11-13]. The use of a CF is the general practice to control this problem; it provides proper lubrication and cooling, resulting in an improved surface finish [14], [15]. Petroleum-based oils account for a large share of the traditional CFs used worldwide. The excessive usage of petroleum-based oils has resulted in massive environmental hazards, non-biodegradability, and eco-toxicity, including serious operator health issues [16], [17]. The present focus is therefore on vegetable oil-based CFs due to their biodegradability, eco-friendliness, and non-toxicity. They have almost all the competitive qualities necessary to replace the traditionally used harmful CFs [18-20].

2. Literature review

Sivaraman and Sivakumar [21] conducted an experimental study of surface grinding of AISI 317L, focusing on input variables such as workpiece chemical composition, DOC, GWS, feed rate, grain size, etc., to optimize Ra using Taguchi design of experiments. Arvind and Periyarsamy [22] optimized the surface grinding input parameters, such as grinding wheel abrasive grain size, DOC, and feed, of AISI 1035 steel with the use of the Taguchi method and RSM. The response variables focused on were Ra and Rz values of surface roughness. They inferred that RSM performed slightly better than the Taguchi method when the input parameters were slightly varied up to certain levels. Kishore et al. [23] analyzed the grinding performance of Inconel 625 in dry, wet, and Minimum Quantity Lubrication (MQL) conditions, comparing tangential forces and surface roughness. They used RSM and four ML models, namely MultiLayer Perceptron (MLP), k-nearest neighbors (KNN), and Support Vector Machine (SVM) with two kernels, for the analysis and prediction, where they found that KNN gave better prediction than the other models and the MQL technique gave better results for Ra. Mirifar et al. [6] designed an Artificial Neural Network (ANN) to predict Ra and grinding forces by using an individual integrated acoustic emission (AE) sensor in the machine tool. The model was developed using a feedforward neural network with Bayesian backpropagation and validated by experimental data. They found the predictions coincided with the experimentally observed results with 99 % accuracy. Uçar and Katı [24] proposed an ML prediction model based on Gaussian Process Regression (GPR) for the Ra of the LM25/SiC/4p metal matrix composite. They performed experiments on a cylindrical grinder considering DOC, wheel velocity, work velocity, and feed as input parameters, which were optimized and predicted using the above model, the results of which were tested with the SVM model and a 5-fold cross-validation algorithm. They inferred that the GPR model adequately satisfies the R-squared, root mean squared error, and mean absolute error criteria. Prashanth et al. [25] used ML algorithms such as Support Vector Regression (SVR), GPR, and boosted tree ensemble techniques to predict responses such as tangential grinding forces, normal grinding forces, temperature, and Ra during grinding of Inconel 751. The input parameters focused on were cutting velocity, DOC, feed rate, and environmental conditions. They found that the GPR algorithm exhibited better results than the other models.

Dubey et al. [10] conducted an experimental study of turning AISI 304 steel, focusing on Ra as the response variable. The input parameters selected were cutting speed, DOC, feed rate, and nanoparticle concentration. RSM was adopted for designing the experiments. Minimum Quantity Lubrication (MQL) with the CF added with alumina nanoparticles of average particle sizes of 30 nm and 40 nm was used. The ML models Linear Regression (LR), Random Forest (RF), and SVM were used for the prediction of Ra. For checking the model accuracy, the Coefficient of Determination ($R^2$), Mean Absolute Percentage Error (MAPE), and Mean Square Error (MSE) were utilized. They inferred that the RF model performed better than the other two models for CF with both sizes of nanoparticles. Reddy et al. [26] used second-order multiple regression and ANN to develop prediction models for predicting Ra. They used a full factorial design to perform experiments using a CNC Lathe with a Carbide cutting tool. The outcome was that the ANN model performed better than the multiple regression model for prediction. Cica et al. [27] selected three ML algorithms, polynomial regression, SVM, and GPR, for the prediction of cutting force and cutting power to turn AISI 1045 under MQL and high-pressure coolant cutting conditions. The optimal process parameters for both the lubrication methods were 210 m/min cutting speed, 1.5 for depth of cut, and 0.224 mm/rev feed rate. Asiltürk et al. [28] created an adaptive network-based fuzzy inference system (ANFIS) to predict Ra and vibration for the cylindrical grinding of AISI 8620 steel using an aluminium oxide grinding wheel. The workpiece speed, feed rate, and DOC were the input variables to ANFIS. Validation and confirmation experiments were conducted for the predicted results, and the Gauss-shaped membership function attained a prediction accuracy of 99 %.

Gopan et al. [29] developed an ANN and Genetic Algorithm (GA) integrated prediction and optimization model for the response variable Ra of cylindrical grinding operation with a Silicon Carbide grinding wheel. For predicting the values of Ra, the Multilayer Normal Feed Forward ANN model of type 3-5-1 was taken up, and the values agreed with the experimental results. The integration of GA with ANN resulted in getting optimal settings to get minimum surface roughness. Huang et al. [30] applied the Taguchi method, RSM, and Back-Propagation Neural Networks (BPNN) in combination with GA to improve the quality of piston manufacturing processes to increase the yield. The ring groove diameter specification, inner groove diameter specification, and inner diameter of pistons were selected as the response variables with the type of carbon steel, type of CF, cutting depth, spindle speed, and chuck pressure as input variables. The three models developed were the Taguchi model, the Taguchi RSM model, and the Taguchi BPNN GA model. Experiments were performed to confirm and validate the predicted results with the experimental results, and the Taguchi BPNN GA model performed better as compared to the remaining two models. Nguyen et al. [31] presented a model for monitoring and prediction of the wear of the grinding wheel utilizing the grinding force signal attained at the time of grinding of Ti-6Al-4V alloy using the adaptive neural fuzzy inference system – GPR and Taguchi method. The performance of the model was good, and in comparison with the experimental outcomes, the model prediction had an average error of 0.31 % and a reliability of 98 %.

From the literature, it can be seen that the prediction of Ra has been attempted on various materials, using different CFs and cooling environments, and with various optimization methods and ML algorithms. The main aims of the current work are:

1) To develop a predictive model for Ra using the ML algorithms LR, Decision Tree (DT), RF, Epsilon-Support Vector Regression (ε-SVR), and KNN, with Ra as the output response variable for workpieces after the surface grinding operation, and with the type of CF used, GWG, GWS, DOC, and WS as input parameters.

2) To identify the algorithm giving the best prediction of Ra based on the metrics MSE, MAPE, and $R^2$, to develop that model further by tuning its hyperparameters, and to design a Graphical User Interface (GUI) for the model so that Ra values can be predicted with ease.

The experimental results of Ra are imported into Jupyter Notebook using Pandas, a Python library for data analysis.

The current study’s novelty lies in the development of a predictive model for Ra using ML algorithms for an experimental case study of surface grinding EN8 steel, utilizing eco-friendly, biodegradable Cashew Nut Shell Oil/Liquid (CNSL) as CF. CNSL is a non-edible bio-oil extracted from waste cashew nut shells, whose performance is compared with the traditional synthetic CF [32]. Additionally, a graphical user interface (GUI) is provided to enable the easy prediction of Ra values. A comparative analysis of the selected case study findings with the predictions from the developed ML model is performed to assess the model’s validity.

The paper is organized as follows: Section 1 introduces the topic, and a brief literature review is presented in Section 2. Section 3 briefly discusses the ML algorithms used in the study. In Section 4, the case study chosen for the analysis is highlighted. Section 5 compares the results of the ML algorithms with the Taguchi results, followed by a discussion of them. The study is concluded in Section 6, which is followed by a list of references.

3. Machine learning algorithms

3.1. Linear regression (LR) [10], [33], [34]

Linear Regression (LR) is a type of supervised machine learning model that establishes the linear relation between a dependent variable and one or more independent variables by fitting a linear equation to observed data. It is the simplest and most commonly used predictive tool to decide the relation between continuous variables, and also the base for some more intricate algorithms such as Ridge Regression, SVR, etc. The equation representing LR is given in Eq. (1):

$$y = \beta_0 + \beta_1 x + \varepsilon, \quad (1)$$

where $y$ – dependent variable, $x$ – independent variable, $\beta_0$ – line intercept, $\beta_1$ – slope or LR coefficient, $\varepsilon$ – noise or error.

In the case of a single independent variable, it is called a Simple LR and Multiple LR when the independent variables are more than one. With one dependent variable, it is called Univariate LR and Multivariate LR when the dependent variables are more than one.
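As a minimal sketch of the simple (single-variable) case, the intercept and slope of the fitted line can be estimated by ordinary least squares. The function name `fit_simple_lr` and the sample data are illustrative assumptions and are not taken from the study.

```python
from statistics import mean

def fit_simple_lr(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical, noise-free data generated from y = 1 + 2x
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]
intercept, slope = fit_simple_lr(x, y)
```

With several independent variables, as in the grinding data, the same idea extends to multiple LR, where one coefficient is estimated per input variable.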

3.2. Decision tree (DT) [35], [36]

Decision Trees (DTs) are non-parametric techniques categorized under supervised learning that are used for both regression and classification tasks. In regression decision trees, the target variable is continuous. A DT takes the form of a tree structure, where internal nodes represent dataset attributes, branches depict decision rules, and leaf nodes signify individual outcomes, as illustrated in Fig. 1.

Fig. 1. Pictorial representation of decision tree [35]

![Pictorial representation of decision tree [35]](https://static-01.extrica.com/articles/25067/25067-img1.jpg)

Terminology of DT (refer to Fig. 1):

Root Node: The decision tree starts with the root node, which represents the whole dataset and subsequently divides into two or more homogeneous subsets.

Decision Node: A sub-node is termed a decision node after its splitting into other sub-nodes.

Splitting: The division of a decision node or root node into sub-nodes depending on predetermined criteria is referred to as splitting.

Leaf Node: Leaf nodes represent the ultimate output nodes and are not to be divided anymore.

Branch/Sub Tree: A branch or sub-tree is a division of the whole tree structure.

Pruning: Removal of undesired branches from a tree or the practice of eliminating sub-nodes of decision nodes is known as pruning. It is somewhat the opposite of the splitting process.

Parent/Child node: A node that splits into sub-nodes is named as the parent node, while the sub-nodes are named as the child nodes of the parent.

In a DT, the prediction process begins at the root node. The algorithm compares the attribute tested at the root node with the corresponding value in the record being predicted and, based on this comparison, follows the appropriate branch to the next node. At each sub-node, the comparison is repeated, and the process continues until a leaf node is reached, which gives the final prediction.
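The root-to-leaf traversal described above can be sketched with a small hand-built tree. The thresholds and leaf values below are hypothetical and were not learned from the study's data; only the feature names (GWS, CF code) follow the paper.

```python
# A hand-built regression tree; thresholds and leaf values are hypothetical.
TREE = {
    "feature": "GWS", "threshold": 2250,
    "left": {"value": 0.15},            # GWS <= 2250
    "right": {                          # GWS > 2250
        "feature": "CF", "threshold": 1.5,
        "left": {"value": 0.115},       # CF code 1 (synthetic)
        "right": {"value": 0.085},      # CF code 2 (CNSL)
    },
}

def predict(node, sample):
    """Walk from the root to a leaf, following one branch per decision node."""
    while "value" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["value"]

ra_cnsl_fast = predict(TREE, {"GWS": 3000, "CF": 2})
ra_slow = predict(TREE, {"GWS": 1500, "CF": 1})
```

Each loop iteration corresponds to one comparison at a decision node, and the loop ends at a leaf node, whose stored value is returned as the prediction.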

3.3. Random forest [10], [37]

The RF algorithm is visually represented in Fig. 2. This machine learning algorithm belongs to the supervised learning category and can be applied to classification as well as regression problems. RF offers high precision in predictions by overcoming the limitations of a single predictive model. It utilizes ensemble learning, which involves combining multiple predictors to tackle complex problems, thereby improving the overall model performance. RF consists of multiple DTs created from different subsets of the dataset, and the average of the predictions of these DTs is used to improve the model's predictive accuracy.

Fig. 2. Pictorial representation of random forest [37]

![Pictorial representation of random forest [37]](https://static-01.extrica.com/articles/25067/25067-img2.jpg)
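The idea of averaging trees built on different subsets of the data can be sketched as follows. This is a simplified illustration under stated assumptions: each "tree" is a depth-1 stump on a single feature, the subsets are bootstrap samples, and the data are hypothetical. A full RF implementation also samples features at each split.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Depth-1 regression tree on one feature: choose the split with least squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:          # candidate thresholds below the maximum x
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lo, hi = mean(left), mean(right)
        sse = sum((y - lo) ** 2 for y in left) + sum((y - hi) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lo, hi)
    if best is None:                        # sample contained a single distinct x
        constant = mean(ys)
        return lambda x: constant
    _, t, lo, hi = best
    return lambda x: lo if x <= t else hi

def fit_forest(xs, ys, n_trees=25, seed=0):
    """Fit each stump on a bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]   # sample with replacement
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(tree(x) for tree in trees)

# Hypothetical one-feature data in which the response falls as x rises
xs = [1, 2, 3, 4, 5, 6]
ys = [0.20, 0.19, 0.18, 0.10, 0.09, 0.08]
forest = fit_forest(xs, ys)
```

Because every stump sees a slightly different sample, the individual split points differ, and the averaged prediction is smoother than that of any single tree.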

3.4. Support vector machines [10], [38], [39]

SVM is a supervised learning method used for both classification and regression. The method primarily aims to find the best-fit line, called a hyperplane, that contains the maximum number of points, as depicted in Fig. 3. To frame the marginal planes, SVM takes the extreme points, called support vectors, from which the name of the method is derived.

When SVM is applied to regression problems, it is called SVR or Epsilon-Support Vector Regression (ε-SVR). In ε-SVR, the objective is to find a function $f(x)$ that deviates from the observed targets $y_i$ of the training data points $(x_i, y_i)$ by at most ε, while remaining as flat as possible. Points at a distance of less than ε from the function are treated as negligible errors, whereas points at a distance of more than ε are penalized. Ideally, all points should lie between $f(x) - \varepsilon$ and $f(x) + \varepsilon$. The flow chart for building the SVM model is shown in Fig. 4.

Fig. 3. Graph representing SVM [39]

![Graph representing SVM [39]](https://static-01.extrica.com/articles/25067/25067-img3.jpg)

Fig. 4. Flow chart to implement SVM [10]

![Flow chart to implement SVM [10]](https://static-01.extrica.com/articles/25067/25067-img4.jpg)
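The role of ε can be illustrated with the ε-insensitive loss commonly used in SVR: deviations inside the tube of half-width ε cost nothing, and only the excess beyond ε is counted. The function name and the value ε = 0.01 below are assumptions made for illustration; the two example pairs are experimental and untuned ε-SVR values listed in Table 2.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon-tube, linear in the excess outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)

EPSILON = 0.01  # assumed tube half-width, for illustration only

# Deviation of 0.003 is inside the tube, so it is ignored
loss_inside = epsilon_insensitive_loss(0.126, 0.129, EPSILON)

# Deviation of 0.019 exceeds the tube by about 0.009
loss_outside = epsilon_insensitive_loss(0.110, 0.129, EPSILON)
```

A smaller ε makes the model sensitive to smaller deviations, which matters when the response itself is small, as with Ra values of the order of 0.1 µm.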

3.5. K-nearest neighbors regression (KNN) [40], [41]

KNN algorithm falls under the supervised learning category and is mainly used for classification problems. It can also be applied to regression problems. The KNN algorithm assumes that when input data points are represented as vectors in a multi-dimensional feature space, data points that are closer together tend to have similar properties, outputs, or predicted values. The Euclidean distance is calculated to decide the nearness of the points. If there is a hidden relation between the input data and the output variable that is to be predicted, then the KNN is supposed to understand this from the training data. The KNN algorithm stores all the data used during the training phase. When a test input is provided, it examines the entire training dataset to identify the training points that are closest to the test input data point. The algorithm then predicts the output based on these nearby points. According to this algorithm, the neighboring points of the test data have a greater influence on the output than points that are further away.
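A minimal sketch of KNN regression under these assumptions: the Euclidean distance decides nearness, and the prediction is the unweighted average of the k nearest training targets (distance-weighted averaging is a common variant). The function name and the data are hypothetical.

```python
import math

def knn_predict(train_X, train_y, query, k=3):
    """Average the targets of the k training points nearest to the query."""
    order = sorted(range(len(train_X)),
                   key=lambda i: math.dist(train_X[i], query))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / k

# Hypothetical two-feature training data with one distant outlier
train_X = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (10.0, 10.0)]
train_y = [1.0, 2.0, 3.0, 100.0]

prediction = knn_predict(train_X, train_y, query=(0.1, 0.1), k=3)
```

Since the distance is computed on raw feature values, inputs on very different scales (for example, a wheel speed in RPM next to a depth of cut in µm) would usually be rescaled first so that no single variable dominates the neighbor search.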

3.6. Performance metrics

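The MSE, MAPE, and $R^2$ values are the three performance measures applied for checking the model’s accuracy in predicting Ra. They are evaluated for each model using Eq. (2), Eq. (3), and Eq. (4), respectively, as given below:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2, \quad (2)$$

$$\mathrm{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|, \quad (3)$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}, \quad (4)$$

where $n$ – number of data points, $y_i$ – observed values, $\hat{y}_i$ – predicted values, $\bar{y}$ – average value.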
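The three metrics can be sketched in a few lines, using the standard definitions of mean square error, mean absolute percentage error (in percent), and coefficient of determination. The function names are illustrative; the sample values are the experimental Ra and the Taguchi LR predictions for the four test rows listed in Table 2.

```python
def mse(y, y_hat):
    """Mean of the squared differences between observed and predicted values."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)

def mape(y, y_hat):
    """Mean absolute error relative to the observed value, in percent."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, y_hat)) / len(y)

def r2(y, y_hat):
    """One minus the ratio of residual to total sum of squares."""
    y_bar = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - y_bar) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

# Experimental Ra and Taguchi LR predictions for the test rows of Table 2
ra_expt = [0.126, 0.122, 0.096, 0.110]
ra_pred = [0.128, 0.123, 0.097, 0.109]

metrics = (mse(ra_expt, ra_pred), mape(ra_expt, ra_pred), r2(ra_expt, ra_pred))
```

Because the tabulated predictions are rounded to three decimals, values computed this way are close to, but not identical with, the metrics reported in Table 3.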

Case Study: Optimization and Prediction of Surface Grinding Response Ra Using CNSL as a Novel CF [32]

Table 1. Grinding input variables and output response of the selected case study [32]

| Sr. No. | CF | CF code | GWS (RPM) | GWG | GWG code | DOC (µm) | WS (m/min) | Ra (µm) |
|---|---|---|---|---|---|---|---|---|
| 1 | Synthetic | 1 | 1500 | G46 | 46 | 10 | 10 | 0.126 |
| 2 | Synthetic | 1 | 1500 | G46 | 46 | 20 | 15 | 0.110 |
| 3 | Synthetic | 1 | 1500 | G60 | 60 | 10 | 15 | 0.186 |
| 4 | Synthetic | 1 | 1500 | G60 | 60 | 20 | 10 | 0.142 |
| 5 | Synthetic | 1 | 3000 | G46 | 46 | 10 | 15 | 0.098 |
| 6 | Synthetic | 1 | 3000 | G46 | 46 | 20 | 10 | 0.122 |
| 7 | Synthetic | 1 | 3000 | G60 | 60 | 10 | 10 | 0.138 |
| 8 | Synthetic | 1 | 3000 | G60 | 60 | 20 | 15 | 0.102 |
| 9 | CNSL | 2 | 1500 | G46 | 46 | 10 | 15 | 0.202 |
| 10 | CNSL | 2 | 1500 | G46 | 46 | 20 | 10 | 0.199 |
| 11 | CNSL | 2 | 1500 | G60 | 60 | 10 | 10 | 0.114 |
| 12 | CNSL | 2 | 1500 | G60 | 60 | 20 | 15 | 0.096 |
| 13 | CNSL | 2 | 3000 | G46 | 46 | 10 | 10 | 0.091 |
| 14 | CNSL | 2 | 3000 | G46 | 46 | 20 | 15 | 0.093 |
| 15 | CNSL | 2 | 3000 | G60 | 60 | 10 | 15 | 0.083 |
| 16 | CNSL | 2 | 3000 | G60 | 60 | 20 | 10 | 0.117 |
| 17 | CNSL | 2 | 3000 | G60 | 60 | 20 | 15 | 0.055 |
| 18 | Synthetic | 1 | 1500 | G60 | 60 | 10 | 10 | 0.147 |
| 19 | CNSL | 2 | 3000 | G60 | 60 | 10 | 10 | 0.073 |

Usgaonkar and Prabhu Gaonkar [32] conducted surface grinding experiments on EN8 steel plates measuring 150 mm × 25 mm × 10 mm using CNSL, an environmentally sound, non-edible bio-CF, and a traditional synthetic CF for comparison. An SFW1 HMT LIMITED surface grinding machine was utilized for the grinding process, with Ra as the output variable. The input variables, each set at two levels, were the CF type (Synthetic, CNSL), GWG (A46K5V10-G46, A60K5V10-G60), GWS (1500 RPM, 3000 RPM), DOC (10 µm, 20 µm), and WS (10 m/min, 15 m/min). The experiments were designed using an L16 Orthogonal Array (OA) and a $2^5$ Taguchi Design of Experiments (DOE), and the analysis was done using Minitab. A Mitutoyo SurfTest SJ-210 was used to measure Ra with a cut-off of 0.8 mm and a sampling length of 4 mm. The grinding temperature was recorded using a FLUKE Ti10 Thermal Imager. The output parameters were optimized using Taguchi and LR, and a prediction model was proposed. The predicted results were validated by performing validation experiments. The study concluded that the eco-friendly bio-CF CNSL performed better than the traditional, harmful synthetic CF.
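To make the size of the design space concrete, the sketch below enumerates every combination of the five two-level factors quoted above. The dictionary keys are illustrative names chosen here; the levels are those stated for the case study.

```python
from itertools import product

# Two levels per factor, as stated for the case study; key names are illustrative.
LEVELS = {
    "CF": ["Synthetic", "CNSL"],
    "GWG": ["G46", "G60"],
    "GWS_rpm": [1500, 3000],
    "DOC_um": [10, 20],
    "WS_m_per_min": [10, 15],
}

# Full factorial design: one run for every combination of levels
full_factorial = [dict(zip(LEVELS, combo)) for combo in product(*LEVELS.values())]
n_runs = len(full_factorial)
```

The full factorial contains 32 runs, so an L16 orthogonal array covers half of these combinations while keeping the levels of each factor balanced.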

The above-mentioned case study has been selected for the current study to develop a predictive model for the output variable Ra, using the ML algorithms LR, DT, RF, ε-SVR, and KNN, which are applied to the experimentally acquired data. Sixteen experiments from the Taguchi L16 OA and three validation experiments are chosen for the analysis, making a total of 19 experiments, as given in Table 1. These experimental Ra results are imported into Jupyter Notebook using Pandas, a Python library for data analysis. Performance metrics such as MSE, MAPE, and $R^2$ are calculated to determine which model performs better. This model is further refined by tuning its hyperparameters. A GUI for the best-performing model is designed to predict Ra values with ease.

4. Analysis and discussion

4.1. Ra predictions by the models

The Ra values obtained from the selected surface grinding case study, as listed in Table 1, are used for developing the predictive models using the ML algorithms LR, DT, RF, ε-SVR, and KNN. Out of 19 data points, 15 (80 %) are selected at random to train the model, while the remaining 4 (20 %) are used to test or validate the model. Predictions of Ra by Taguchi LR [32] and the above-mentioned ML algorithms are given in Table 2.
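A random 80/20 split of 19 data points can be sketched as follows. The helper name `split_indices` and the seed are assumptions for illustration; the study does not state which seed or routine was used.

```python
import random

def split_indices(n, train_fraction=0.8, seed=42):
    """Shuffle the indices 0..n-1 and cut them into train and test lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(n * train_fraction)      # int(19 * 0.8) gives 15
    return indices[:n_train], indices[n_train:]

train_idx, test_idx = split_indices(19)
```

With so few test points, the computed metrics depend noticeably on which four rows fall into the test set, so fixing the seed is what makes a comparison between models repeatable.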

Table 2. Experimental Ra and Ra predicted by each model for the test data

| Expt. Sr. No. | CF | GWS | GWG | DOC | WS | Ra (expt.) | Taguchi LR | LR | DT | RF | KNN | ε-SVR UT | ε-SVR T |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1500 | 46 | 10 | 10 | 0.126 | 0.128 | 0.192 | 0.202 | 0.171 | 0.169 | 0.129 | 0.127 |
| 6 | 1 | 3000 | 46 | 20 | 10 | 0.122 | 0.123 | 0.126 | 0.138 | 0.117 | 0.102 | 0.124 | 0.123 |
| 12 | 2 | 1500 | 60 | 20 | 15 | 0.096 | 0.097 | 0.147 | 0.186 | 0.175 | 0.157 | 0.098 | 0.097 |
| 2 | 1 | 1500 | 46 | 20 | 15 | 0.110 | 0.109 | 0.192 | 0.199 | 0.189 | 0.172 | 0.129 | 0.108 |

Table 2 shows that the optimized model of the selected case study, referred to here as Taguchi LR, predicts values closest to the experimental results, followed by the untuned ε-SVR (ε-SVR-UT). Among the ML models alone, ε-SVR-UT is closer in prediction to the experimentally observed values than the other ML models. This is confirmed by the performance metrics listed in Table 3. The MSE for the predicted values of the Taguchi LR model is the lowest, i.e., 0.000001766, followed by the MSE for the predicted values of the ε-SVR-UT model, which is 0.000088688.

Table 3. Computed performance metrics for the test data

| Metric | Taguchi LR | LR | DT | RF | KNN | ε-SVR-UT | ε-SVR-T |
|---|---|---|---|---|---|---|---|
| MSE | 0.000001766 | 0.003401569 | 0.005513250 | 0.003630977 | 0.002453500 | 0.000088688 | 0.000001638 |
| MAPE | 1.156489374 | 45.75853131 | 62.02282633 | 48.46276657 | 42.60643245 | 5.528787583 | 0.988123699 |
| $R^2$ | 0.98712567 | 0 | 0 | 0 | 0 | 0.491078634 | 0.988025649 |

4.2. Tuning of hyperparameters of ε-SVR-UT model

Since the ε-SVR-UT predictions are more in line with the experimentally obtained results, the ε-SVR model is taken up for refinement by tuning its hyperparameters C, ε, and the kernel function using GridSearchCV. The searched values are C: [0.1, 1, 10, 100], ε: [0.01, 0.1, 0.5, 0.9], and kernel: [linear, polynomial, rbf, sigmoid]. The best results are obtained when C = 0.1, ε = 0.01, and kernel = linear. The values predicted by the tuned ε-SVR (ε-SVR-T) for the testing data are also entered in Table 2, and its performance metrics are given in Table 3. The predictions of the ε-SVR-T model are the closest, with an MSE of 0.000001638, a MAPE of 0.988123699, and an $R^2$ value of 0.988025649, which is the best performance among all the models.
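The exhaustive search that a grid search performs can be sketched without any library. This is an illustration only: the parameter names `C` and `epsilon` are assumed here to correspond to the two numeric lists quoted above, and `toy_score` is a placeholder that stands in for a cross-validated error; it is not the objective used in the study. In scikit-learn, the polynomial kernel is spelled `"poly"`.

```python
from itertools import product

# Candidate values quoted in the text; the names C and epsilon are assumed.
PARAM_GRID = {
    "C": [0.1, 1, 10, 100],
    "epsilon": [0.01, 0.1, 0.5, 0.9],
    "kernel": ["linear", "polynomial", "rbf", "sigmoid"],
}

def grid_search(param_grid, score):
    """Evaluate every combination; return (best_params, best_score), lower is better."""
    best_params, best_score = None, float("inf")
    for combo in product(*param_grid.values()):
        params = dict(zip(param_grid, combo))
        current = score(params)
        if current < best_score:
            best_params, best_score = params, current
    return best_params, best_score

def toy_score(params):
    """Placeholder error so the loop has something to minimize (hypothetical)."""
    penalty = 0.0 if params["kernel"] == "linear" else 1.0
    return params["C"] + params["epsilon"] + penalty

n_candidates = len(list(product(*PARAM_GRID.values())))
best_params, best_score = grid_search(PARAM_GRID, toy_score)
```

The grid contains 4 × 4 × 4 = 64 candidate settings. In practice, the placeholder score would be replaced by the error of a model fitted with each setting and averaged over cross-validation folds.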

4.3. Validation and confirmation

Usgaonkar and Prabhu Gaonkar [32] conducted experiments to validate and confirm the predicted results of the Taguchi LR model of the selected case study. The same experimental readings have been taken here for the validation of the ε-SVR-T model, as shown in Table 4.

Table 4. Validation experiments taken from [32] for the validation of the ε-SVR-T model

| Sr. No. | CF | GWS | GWG | DOC | WS | Ra (expt.) | Ra predicted, Taguchi LR | Ra predicted, ε-SVR T |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1500 | 46 | 10 | 10 | 0.126 | 0.128 | 0.128 |
| 2 | 2 | 3000 | 60 | 20 | 15 | 0.055 | 0.058 | 0.056 |
| 3 | 1 | 3000 | 60 | 20 | 15 | 0.102 | 0.102 | 0.103 |
| 4 | 2 | 1500 | 60 | 10 | 10 | 0.114 | 0.113 | 0.114 |
| 5 | 1 | 1500 | 60 | 10 | 10 | 0.147 | 0.147 | 0.149 |
| 6 | 2 | 3000 | 60 | 10 | 10 | 0.073 | 0.073 | 0.073 |
| 7 | 1 | 3000 | 60 | 10 | 10 | 0.138 | 0.138 | 0.139 |

Overall, the predictions of the ε-SVR-T model align slightly more closely with the experimental values than those of the Taguchi LR model. This is also evident from the performance metrics MSE, MAPE, and $R^2$, which have been calculated and are shown in Table 5.

Table 5. Computed performance metrics for the validation experiments

| Metric | Taguchi LR | ε-SVR-T |
|---|---|---|
| MSE | 0.000003437 | 0.000002647 |
| MAPE | 2.13143147 | 1.684506892 |
| $R^2$ | 0.997993552 | 0.998597607 |

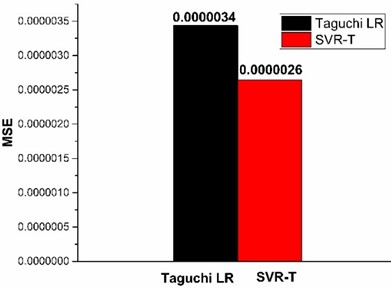

The MSE for the predictions of the ε-SVR-T model is 0.000002647, which is less than the MSE of the Taguchi LR model, whose value is 0.000003437. This is also depicted graphically in Fig. 5.

Fig. 5. Graphical representation of MSE of Taguchi LR and ε-SVR-T model

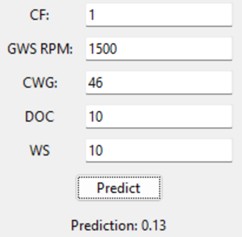

4.4. Developing a GUI for the ε-SVR-T model

A GUI is modeled based on the ε-SVR-T model for the easy depiction of Ra predictions for settings as per the choice of the machinist. The picture of the GUI is shown in Fig. 6.

Fig. 6. GUI based on the ε-SVR-T model
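The paper does not state which toolkit the GUI was built with. As a hedged sketch only, the form below uses Python's standard `tkinter` module, and every name in it (`ALLOWED`, `parse_settings`, `launch_gui`) is hypothetical. Restricting the inputs to the two tested levels per factor is a design choice made here, not a documented feature of the authors' GUI. The `predict` argument stands for any function that maps the five settings to an Ra value, such as a trained model's prediction routine.

```python
ALLOWED = {
    "CF": {1, 2},            # 1 = synthetic, 2 = CNSL
    "GWS": {1500, 3000},     # RPM
    "GWG": {46, 60},
    "DOC": {10, 20},         # µm
    "WS": {10, 15},          # m/min
}

def parse_settings(raw):
    """Convert text-field strings to integers and reject untested levels."""
    settings = {}
    for name, allowed in ALLOWED.items():
        value = int(raw[name])
        if value not in allowed:
            raise ValueError(f"{name}={value} is outside the tested levels {sorted(allowed)}")
        settings[name] = value
    return settings

def launch_gui(predict):
    """Open a small form; `predict` maps a settings dict to an Ra value."""
    import tkinter as tk
    root = tk.Tk()
    root.title("Ra predictor")
    entries = {}
    for row, name in enumerate(ALLOWED):
        tk.Label(root, text=name).grid(row=row, column=0)
        entries[name] = tk.Entry(root)
        entries[name].grid(row=row, column=1)
    result = tk.Label(root, text="Ra = ?")
    result.grid(row=len(ALLOWED) + 1, column=0, columnspan=2)

    def on_click():
        try:
            settings = parse_settings({n: e.get() for n, e in entries.items()})
            result.config(text=f"Ra = {predict(settings):.3f} µm")
        except ValueError as err:
            result.config(text=str(err))

    tk.Button(root, text="Predict", command=on_click).grid(
        row=len(ALLOWED), column=0, columnspan=2)
    root.mainloop()
```

Keeping the input parsing separate from the window code means the validation can be checked without opening a window, and the same parsing can be reused if the interface is later rebuilt with another toolkit.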

5. Observations

The following observations are drawn from this study.

1) The ML model ε-SVR performs better in prediction than the remaining four ML models, with MSE = 0.000088688, MAPE = 5.528787583, and $R^2$ = 0.491078634.

2) The ε-SVR model is further refined by tuning its hyperparameters C, ε, and the kernel function using GridSearchCV. The best results are obtained when C = 0.1, ε = 0.01, and kernel = linear, and the testing results give MSE = 0.000001638, MAPE = 0.988123699, and $R^2$ = 0.988025649, showing considerable improvement in predictions compared with the other ML models and also with the Taguchi LR model proposed by the selected study.

3) The ε-SVR-T model is also tested with the validation experiments of the selected study. The results show that the predictions of the suggested ε-SVR-T model are better, with MSE = 0.000002647, MAPE = 1.684506892, and $R^2$ = 0.998597607, compared with the Taguchi LR model, which has MSE = 0.000003437, MAPE = 2.13143147, and $R^2$ = 0.997993552.

6. Conclusions

The study undertook the predictive modeling of Ra obtained from an experimental study of surface grinding using the ML algorithms LR, DT, RF, ε-SVR, and KNN, and compared their performance. The ε-SVR model outperformed the other four models in predictions. A GUI based on the ε-SVR-T model is developed for easy prediction of Ra, which will help the machinist to have an early idea of the output response Ra before actually conducting the grinding process. The study also showed that ML algorithms can be used effectively to develop output response prediction models for important machining operations like surface grinding, and the approach could be extended to other machining operations as well.

References

-

P. J. Patil and C. R. Patil, “Analysis of process parameters in surface grinding using single objective Taguchi and multi-objective grey relational grade,” Perspectives in Science, Vol. 8, pp. 367–369, Sep. 2016, https://doi.org/10.1016/j.pisc.2016.04.077

-

M. Dogra, V. S. Sharma, J. S. Dureja, and S. S. Gill, “Environment-friendly technological advancements to enhance the sustainability in surface grinding – A review,” Journal of Cleaner Production, Vol. 197, pp. 218–231, Oct. 2018, https://doi.org/10.1016/j.jclepro.2018.05.280

-

B. Weiss, A. Lefebvre, O. Sinot, M. Marquer, and A. Tidu, “Effect of grinding on the sub-surface and surface of electrodeposited chromium and steel substrate,” Surface and Coatings Technology, Vol. 272, pp. 165–175, Jun. 2015, https://doi.org/10.1016/j.surfcoat.2015.04.009

-

P. Ranga and D. Gupta, “Review on effects of input parameters and design of experiments on surface grinding process in EN31 material,” International Journal of Scientific Engineering and Technology, Vol. 5, No. 5, pp. 232–238, 2016.

-

M. K. Külekcý, “Analysis of process parameters for a surface-grinding process based on the Taguchi method,” Materials and technology, Vol. 47, No. 1, pp. 105–109, 2013.

-

S. Mirifar, M. Kadivar, and B. Azarhoushang, “First steps through intelligent grinding using machine learning via integrated acoustic emission sensors,” Journal of Manufacturing and Materials Processing, Vol. 4, No. 2, p. 35, Apr. 2020, https://doi.org/10.3390/jmmp4020035

-

J.-S. Kwak, “Application of Taguchi and response surface methodologies for geometric error in surface grinding process,” International Journal of Machine Tools and Manufacture, Vol. 45, No. 3, pp. 327–334, Mar. 2005, https://doi.org/10.1016/j.ijmachtools.2004.08.007

-

F. Kara, U. Köklü, and U. Kabasakaloğlu, “Taguchi optimization of surface roughness in grinding of cryogenically treated AISI 5140 steel,” Materials Testing, Vol. 62, No. 10, pp. 1041–1047, Oct. 2020, https://doi.org/10.3139/120.111583

-

D. Kumar Patel, D. Goyal, and B. S. Pabla, “Optimization of parameters in cylindrical and surface grinding for improved surface finish,” Royal Society Open Science, Vol. 5, No. 5, p. 171906, May 2018, https://doi.org/10.1098/rsos.171906

-

V. Dubey, A. K. Sharma, and D. Y. Pimenov, “Prediction of surface roughness using machine learning approach in MQL Turning of AISI 304 steel by varying nanoparticle size in the cutting fluid,” Lubricants, Vol. 10, No. 5, p. 81, May 2022, https://doi.org/10.3390/lubricants10050081

-

B. Lavisse et al., “The effects of the flow rate and speed of lubricoolant jets on heat transfer in the contact zone when grinding a nitrided steel,” Journal of Manufacturing Processes, Vol. 35, pp. 233–243, Oct. 2018, https://doi.org/10.1016/j.jmapro.2018.07.029

-

B. Li et al., “Heat transfer performance of MQL grinding with different nanofluids for Ni-based alloys using vegetable oil,” Journal of Cleaner Production, Vol. 154, pp. 1–11, Jun. 2017, https://doi.org/10.1016/j.jclepro.2017.03.213

-

Y. M. Shashidhara and S. R. Jayaram, “Vegetable oils as a potential cutting fluid-An evolution,” Tribology International, Vol. 43, No. 5-6, pp. 1073–1081, May 2010, https://doi.org/10.1016/j.triboint.2009.12.065

-

R. A. Irani, R. J. Bauer, and A. Warkentin, “A review of cutting fluid application in the grinding process,” International Journal of Machine Tools and Manufacture, Vol. 45, No. 15, pp. 1696–1705, Dec. 2005, https://doi.org/10.1016/j.ijmachtools.2005.03.006

-

V. K. Mamidi and M. A. Xavior, “A review on selection of cutting fluids,” National Monthly Refereed Journal of Research in Science and Technology, Vol. 1, No. 5, pp. 3–19, 2012.

-

S. Debnath, M. M. Reddy, and Q. S. Yi, “Environmental friendly cutting fluids and cooling techniques in machining: a review,” Journal of Cleaner Production, Vol. 83, pp. 33–47, Nov. 2014, https://doi.org/10.1016/j.jclepro.2014.07.071

-

T. P. Jeevan and S. R. Jayaram, “Performance evaluation of jatropha and pongamia oil based environmentally friendly cutting fluids for turning AA 6061,” Advances in Tribology, Vol. 2018, pp. 1–9, Jan. 2018, https://doi.org/10.1155/2018/2425619

-

R. Sankaranarayanan, N. Rajesh Jesudoss Hynes, J. Senthil Kumar, and G. M. Krolczyk, “A comprehensive review on research developments of vegetable-oil based cutting fluids for sustainable machining challenges,” Journal of Manufacturing Processes, Vol. 67, pp. 286–313, Jul. 2021, https://doi.org/10.1016/j.jmapro.2021.05.002

-

R. A. Kazeem et al., “Evaluation of palm kernel oil as lubricants in cylindrical turning of AISI 304 austenitic stainless steel using Taguchi-grey relational methodology,” Materials Research Express, Vol. 10, No. 12, p. 126505, Dec. 2023, https://doi.org/10.1088/2053-1591/ad11fe

-

R. Katna, M. Suhaib, and N. Agrawal, “Nonedible vegetable oil-based cutting fluids for machining processes – a review,” Materials and Manufacturing Processes, Vol. 35, No. 1, pp. 1–32, Jan. 2020, https://doi.org/10.1080/10426914.2019.1697446

-

B. Sivaraman and R. Sivakumar, “Optimization of grinding parameters in austenitic stainless steel AISI 317L using Taguchi method,” International Journal of Mechanical and Production Engineering Research and Development, Vol. 8, No. 2, pp. 1033–1038, Jan. 2018, https://doi.org/10.24247/ijmperdapr2018119

-

S. Arvind and S. Periyarsamy, “Optimization of surface grinding process parameters by Taguchi method and response surface methodology,” International Journal of Engineering Research and Technology, Vol. 9655, No. MAY 2014, pp. 944–949, May 2015.

-

K. Kishore, S. R. Chauhan, and M. K. Sinha, “Application of machine learning techniques in environmentally benign surface grinding of Inconel 625,” Tribology International, Vol. 188, p. 108812, Oct. 2023, https://doi.org/10.1016/j.triboint.2023.108812

-

F. Uçar and N. Kati, “Machine learning based predictive model for surface roughness in cylindrical grinding of Al based metal matrix composite,” European Journal of Technic, Vol. 10, No. 2, pp. 415–430, Dec. 2020, https://doi.org/10.36222/ejt.773093

-

G. S. Prashanth, P. Sekar, S. Bontha, and A. S. S. Balan, “Grinding parameters prediction under different cooling environments using machine learning techniques,” Materials and Manufacturing Processes, Vol. 38, No. 2, pp. 235–244, Jan. 2023, https://doi.org/10.1080/10426914.2022.2116043

-

B. S. Reddy, G. Padmanabha, and K. V. K. Reddy, “Surface roughness prediction techniques for CNC turning,” Asian Journal of Scientific Research, Vol. 1, No. 3, pp. 256–264, Apr. 2008, https://doi.org/10.3923/ajsr.2008.256.264

-

D. Cica, B. Sredanovic, S. Tesic, and D. Kramar, “Predictive modeling of turning operations under different cooling/lubricating conditions for sustainable manufacturing with machine learning techniques,” Applied Computing and Informatics, Vol. ahead-of-print, No. ahead-of-print, pp. 162–180, Aug. 2020, https://doi.org/10.1016/j.aci.2020.02.001

-

I. Asiltürk, M. Tinkir, H. El Monuayri, and L. Çelik, “An intelligent system approach for surface roughness and vibrations prediction in cylindrical grinding,” International Journal of Computer Integrated Manufacturing, Vol. 25, No. 8, pp. 750–759, Aug. 2012, https://doi.org/10.1080/0951192x.2012.665185

-

V. Gopan, K. L. D. Wins, and A. Surendran, “Integrated ANN-GA approach for predictive modeling and optimization of grinding parameters with surface roughness as the response,” Materials Today: Proceedings, Vol. 5, No. 5, pp. 12133–12141, Jan. 2018, https://doi.org/10.1016/j.matpr.2018.02.191

-

M.-L. Huang, Y.-H. Hung, and Z.-S. Yang, “Validation of a method using Taguchi, response surface, neural network, and genetic algorithm,” Measurement, Vol. 94, pp. 284–294, Dec. 2016, https://doi.org/10.1016/j.measurement.2016.08.006

-

D. Nguyen, S. Yin, Q. Tang, P. X. Son, and L. A. Duc, “Online monitoring of surface roughness and grinding wheel wear when grinding Ti-6Al-4V titanium alloy using ANFIS-GPR hybrid algorithm and Taguchi analysis,” Precision Engineering, Vol. 55, pp. 275–292, Jan. 2019, https://doi.org/10.1016/j.precisioneng.2018.09.018

-

G. G. S. Usgaonkar and R. S. Prabhu Gaonkar, “Surface grinding responses optimization with a promising eco-friendly cutting fluid,” Materials Today: Proceedings, Vol. 90, pp. 50–55, Jan. 2023, https://doi.org/10.1016/j.matpr.2023.04.389

-

S. Kavitha, S. Varuna, and R. Ramya, “A comparative analysis on linear regression and support vector regression,” in 2016 Online International Conference on Green Engineering and Technologies (IC-GET), pp. 1–5, Nov. 2016, https://doi.org/10.1109/get.2016.7916627

-

T. M. H. Hope, “Linear regression,” in Machine Learning, Methods and Applications to Brain Disorders, Elsevier, 2020, pp. 67–81, https://doi.org/10.1016/b978-0-12-815739-8.00004-3

-

H. Eshankulov and A. Malikov, “Regression based on decision tree algorithm,” Universum Technical Sciences, Vol. 99, No. 6-6, Jun. 2022, https://doi.org/10.32743/unitech.2022.99.6.14006

-

A. Navada, A. N. Ansari, S. Patil, and B. A. Sonkamble, “Overview of use of decision tree algorithms in machine learning,” in IEEE Control and System Graduate Research Colloquium (ICSGRC), pp. 37–42, Jun. 2011, https://doi.org/10.1109/icsgrc.2011.5991826

-

J. Cheng, G. Li, and X. Chen, “Developing a travel time estimation method of freeway based on floating car using random forests,” Journal of Advanced Transportation, Vol. 2019, pp. 1–13, Jan. 2019, https://doi.org/10.1155/2019/8582761

-

Z. Jurkovic, G. Cukor, M. Brezocnik, and T. Brajkovic, “A comparison of machine learning methods for cutting parameters prediction in high speed turning process,” Journal of Intelligent Manufacturing, Vol. 29, No. 8, pp. 1683–1693, Feb. 2016, https://doi.org/10.1007/s10845-016-1206-1

-

J. Cervantes, F. Garcia-Lamont, L. Rodríguez-Mazahua, and A. Lopez, “A comprehensive survey on support vector machine classification: Applications, challenges and trends,” Neurocomputing, Vol. 408, pp. 189–215, Sep. 2020, https://doi.org/10.1016/j.neucom.2019.10.118

-

S. Gajan, “Data-driven modeling of peak rotation and tipping-over stability of rocking shallow foundations using machine learning algorithms,” Geotechnics, Vol. 2, No. 3, pp. 781–801, Sep. 2022, https://doi.org/10.3390/geotechnics2030038

-

O. Kramer, Dimensionality Reduction with Unsupervised Nearest Neighbors, Intelligent Systems Reference Library. Berlin, Heidelberg: Springer Berlin Heidelberg, 2013, pp. 13–23, https://doi.org/10.1007/978-3-642-38652-7

About this article

The authors have not disclosed any funding.

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Gajesh G. S. Usgaonkar: formal analysis, investigation, methodology, writing – original draft preparation. Rajesh S. Prabhu Gaonkar: conceptualization, project administration, software, supervision, writing – review and editing.

The authors declare that they have no conflict of interest.