Abstract

This paper presents an automated solution that combines deep learning-based computer vision with radio-frequency identification (RFID) technology to verify Vehicle Identification Numbers (VINs) during automotive production. The approach utilizes a YOLO-based object detection model for VIN localization and optical character recognition (OCR) for text extraction. An RFID tag linked to each component provides a reference VIN from the production system. A programmable logic controller (PLC) compares the AI-detected VIN with the RFID data in real time, halting the process if inconsistencies are detected. Otherwise, validated information is used to retrieve precise build instructions. This system enhances traceability, prevents assembly errors, and reduces rework, contributing to Industry 4.0 initiatives. Experimental validation confirmed the effectiveness of this integrated solution.

1. Introduction

Ensuring accurate vehicle assembly is essential for traceability and quality control in automotive manufacturing. The Vehicle Identification Number (VIN) serves as a unique identifier that links each vehicle to its specifications. Errors in identifying or tracking parts through VINs can lead to misbuilds, rework, or costly recalls, adversely affecting manufacturers’ operational efficiency and brand reputation [1].

Traditional verification methods, such as barcode scanning or manual inspection, are labor-intensive and error-prone. Manual scans do not guarantee that the verified part is actually installed, while missed scans may interrupt production [1]. Consequently, a more robust and automated system is essential for reliable part identification and VIN verification.

Technological advancements in smart sensors and RFID have opened new avenues for error-proofing [2]. Vision systems surpass traditional binary sensors, as a single camera can detect a variety of features. Furthermore, deep learning has significantly improved pattern and text recognition under variable conditions. Reading VINs, which are often engraved on metallic surfaces, is similar to license plate recognition, and traditional OCR methods may fail in such challenging environments [3]. Deep learning approaches offer enhanced resilience and accuracy.

We propose a system that integrates YOLO-based vision and OCR with RFID and PLCs. The YOLO model locates the VIN, OCR reads it, and RFID provides a reference identifier. A PLC compares both values, halting the process on mismatch and proceeding with production if matched. This integration strengthens traceability and prevents misbuilds by ensuring consistent part verification.

2. Methodology

2.1. System Architecture

The proposed system is designed for use at stations where component identity must be verified before performing critical tasks like painting or assembly. It integrates three core elements: deep learning-based computer vision, RFID-based tagging, and PLC-controlled logic.

First, the station retrieves the VIN from a reliable source, such as a work order or QR code. A camera then captures an image of the engraved VIN. The YOLO model localizes the VIN area, and an OCR engine extracts the alphanumeric code.

Concurrently, an RFID tag associated with the vehicle or pallet stores or links to the VIN. The RFID reader retrieves this information, and the PLC receives both the AI-extracted and RFID-read VINs. The system compares them to validate the component's identity.

If the VINs match, the PLC queries a centralized database to fetch vehicle-specific parameters (paint, body style, etc.), which are forwarded to robotic controllers. If they differ, the PLC halts the process and alerts the operator. This coordination improves part traceability and process reliability.
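The match-or-halt decision described above can be sketched in a few lines. This is a minimal illustration, not the PLC program itself: the names `Decision`, `normalize`, and `verify`, the dictionary standing in for the centralized database, and the choice to halt when no build record exists are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    proceed: bool                      # True: release the station; False: halt and alert
    reason: str
    build_params: Optional[dict] = None


def normalize(vin: str) -> str:
    """Remove whitespace and upper-case, so formatting differences are not flagged."""
    return "".join(vin.split()).upper()


def verify(ocr_vin: str, rfid_vin: str, build_db: dict) -> Decision:
    """Compare the vision-read VIN with the RFID reference VIN.

    build_db is a hypothetical stand-in for the centralized database,
    mapping a VIN to its vehicle-specific parameters.
    """
    vision, reference = normalize(ocr_vin), normalize(rfid_vin)
    if vision != reference:
        return Decision(False, f"VIN mismatch: vision={vision!r}, rfid={reference!r}")
    params = build_db.get(vision)
    if params is None:
        # Assumption: a missing build record is treated like a mismatch.
        return Decision(False, f"no build record for {vision}")
    return Decision(True, "match", params)
```

A call such as `verify(ocr_text, tag_vin, build_db)` returns a `Decision` whose `proceed` flag would drive the halt or release signal, and whose `build_params` would be forwarded to the robotic controllers.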

The system maintains high performance through real-time validation, persistent identification via RFID, and tight integration with existing automation infrastructure.

2.2. YOLO-based VIN recognition

To automate VIN detection, we use a custom-trained object detection pipeline based on the YOLO (You Only Look Once) model. YOLO is known for real-time inference and precision, making it suitable for industrial applications.

The model treats the VIN as a target object. It was trained on annotated images of various components bearing VINs. YOLO's single-stage detection enables fast localization of the VIN area, even when characters are affected by glare, dirt, or oblique angles.

Once the VIN region is detected, preprocessing steps, like grayscale conversion and thresholding, enhance readability. OCR, implemented using Tesseract, then reads the VIN. To minimize errors, the output undergoes validation based on VIN format rules and checksum logic.
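As an illustration of the validation step, the sketch below applies a format rule and the position-9 check digit. The source does not specify which rules were used, so this is an assumption: the check-digit scheme shown is the one required for North American VINs, and the substitution of O, Q, and I (letters that do not occur in VINs) with the digits they are most easily confused with is a hypothetical cleanup rule. The function names are illustrative.

```python
import re

# Transliteration values for the check-digit calculation.
# The letters I, O and Q are not used in VINs.
_TRANSLIT = {
    **{str(d): d for d in range(10)},
    **dict(zip("ABCDEFGH", range(1, 9))),
    **dict(zip("JKLMN", range(1, 6))),
    "P": 7, "R": 9,
    **dict(zip("STUVWXYZ", range(2, 10))),
}
# Positional weights; position 9 (the check digit itself) has weight 0.
_WEIGHTS = (8, 7, 6, 5, 4, 3, 2, 10, 0, 9, 8, 7, 6, 5, 4, 3, 2)
_VIN_RE = re.compile(r"[A-HJ-NPR-Z0-9]{17}")
# Hypothetical OCR cleanup: map disallowed letters to look-alike digits.
_OCR_FIXES = str.maketrans({"O": "0", "Q": "0", "I": "1"})


def clean_ocr_text(raw: str) -> str:
    """Upper-case, drop non-alphanumeric characters, fix common confusions."""
    return re.sub(r"[^A-Z0-9]", "", raw.upper()).translate(_OCR_FIXES)


def check_digit(vin: str) -> str:
    """Compute the expected character at position 9 of a 17-character VIN."""
    remainder = sum(_TRANSLIT[c] * w for c, w in zip(vin, _WEIGHTS)) % 11
    return "X" if remainder == 10 else str(remainder)


def is_valid_vin(vin: str) -> bool:
    """Format rule (17 allowed characters) plus check-digit rule."""
    return bool(_VIN_RE.fullmatch(vin)) and vin[8] == check_digit(vin)
```

A reading that fails `is_valid_vin` could be rejected or re-captured before it reaches the comparison stage, so that a single misread character is not mistaken for a genuine mismatch.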

The system operates on an edge device with GPU support. A camera, triggered by the PLC, captures the image when the vehicle reaches the station. A lightweight YOLO variant (e.g., YOLOv5s) completes inference in milliseconds, aligning with production takt times.

Testing, reported in Section 3, confirmed the model's accuracy (100 % VIN localization and 98.5 % character recognition on the test set) and its resilience across lighting conditions, supporting its industrial viability relative to traditional OCR systems.

2.3. RFID and PLC integration

The RFID and PLC layers ensure real-time control and robust verification. Once the camera-based system reads the VIN, the RFID tag is scanned to retrieve the reference identifier.

We use passive UHF RFID tags due to their affordability, long range, and compatibility with industrial environments. The tag may carry the VIN or an ID linked to the VIN in the Manufacturing Execution System (MES).

The RFID tag remains with the vehicle throughout production, enabling real-time identification and traceability. Communication between the vision system and PLC occurs via OPC UA, while RFID data is transmitted using PROFINET. Comparison logic is implemented as string-matching within the PLC code. On mismatch, output coils activate alarms.

This integration ensures high-speed, reliable part verification with minimal latency, suitable for high-throughput manufacturing.

2.4. Flowchart of Android + YOLO + RFID workflow

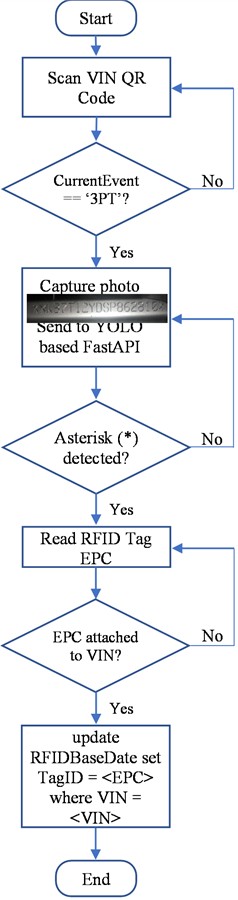

The process begins by scanning a VIN QR code and querying the currentEvent value from the Base table. If the event is '3PT', a photo is captured and sent to a YOLO-based FastAPI service. If an asterisk (*) is detected, the system proceeds to RFID tag reading. If the tag EPC does not yet exist in the RFIDBaseData table, it is stored by updating the TagID column. If the EPC already exists, the system loops and waits for a new RFID tag.

Fig. 1 Flowchart depicting the integrated workflow
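The tag-registration branch of this workflow can be sketched with an in-memory SQLite database. Several details are assumptions made for the example: the Base table is keyed here by a VIN column, the photo capture and YOLO service call are reduced to a `detect_marker` callable, the RFID reader is reduced to a `read_epc` callable, and the wait loop is bounded by `max_reads`, whereas the flowchart loops until a new tag appears.

```python
import sqlite3
from typing import Callable, Optional


def register_tag(conn: sqlite3.Connection, vin: str,
                 detect_marker: Callable[[], bool],
                 read_epc: Callable[[], Optional[str]],
                 max_reads: int = 5) -> str:
    """Follow the flowchart: event check, marker detection, tag registration."""
    row = conn.execute(
        "SELECT currentEvent FROM Base WHERE VIN = ?", (vin,)).fetchone()
    if row is None or row[0] != "3PT":
        return "skipped: event is not 3PT"
    if not detect_marker():            # stands in for photo + YOLO service call
        return "skipped: no asterisk detected"
    for _ in range(max_reads):
        epc = read_epc()
        if epc is None:
            continue                   # nothing in the field yet
        known = conn.execute(
            "SELECT 1 FROM RFIDBaseData WHERE TagID = ?", (epc,)).fetchone()
        if known:
            continue                   # EPC already stored: wait for a new tag
        conn.execute(
            "UPDATE RFIDBaseData SET TagID = ? WHERE VIN = ?", (epc, vin))
        conn.commit()
        return f"registered {epc}"
    return "timeout: no new tag"


def demo_db() -> sqlite3.Connection:
    """Tiny hypothetical dataset used only to exercise register_tag."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE Base (BaseID INTEGER PRIMARY KEY, VIN TEXT, currentEvent TEXT);
        CREATE TABLE RFIDBaseData (BaseID INTEGER, VIN TEXT, TagID TEXT);
        INSERT INTO Base VALUES (1, 'VIN-A', '3PT'), (2, 'VIN-B', '3PT');
        INSERT INTO RFIDBaseData VALUES (1, 'VIN-A', 'EPC-001'), (2, 'VIN-B', NULL);
    """)
    return conn
```

With the demo data, a reader that first reports the already-stored `EPC-001` and then a fresh `EPC-002` results in `EPC-002` being written to the TagID column for `VIN-B`.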

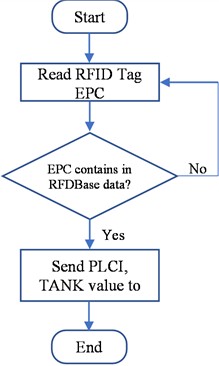

Fig. 2 RFID-PLC communication workflow

2.5. Database and TCP-IP Integration with Fixed RFID Reader

This section outlines the mechanism through which fixed RFID readers interact with the backend database and programmable logic controllers (PLCs) to facilitate automated part verification. The objective is to ensure accurate transmission of tag information and retrieval of associated process parameters in real time.

The logic is illustrated in Fig. 2, which depicts a flowchart of the RFID-PLC communication workflow. Each EPC reported by the fixed reader is looked up in the database. If the EPC is not present, no action is taken, and the system awaits valid input. Conversely, if a match is found, the associated parameters are transmitted to the PLC immediately, ensuring minimal delay and continuous process flow.
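The lookup-then-transmit rule can be expressed as a single handler. This is a sketch under stated assumptions: the mapping `params_by_epc` stands in for the database query, `send` stands in for the TCP/IP write to the PLC (for example, a socket's `sendall`), and the newline-terminated JSON payload is a hypothetical wire format, since the source does not describe the message layout. The PLCI and TANK keys reuse column names from the RFIDBaseData table with made-up values.

```python
import json
from typing import Callable, Mapping


def handle_epc(epc: str,
               params_by_epc: Mapping[str, dict],
               send: Callable[[bytes], None]) -> bool:
    """Process one tag read; return True if parameters were transmitted."""
    params = params_by_epc.get(epc)
    if params is None:
        return False                   # unknown EPC: no action, keep waiting
    # Hypothetical payload: one JSON object per line, keys in sorted order.
    send(json.dumps(params, sort_keys=True).encode("ascii") + b"\n")
    return True


if __name__ == "__main__":
    sent = []                          # collects payloads instead of a real socket
    table = {"EPC-001": {"PLCI": 12, "TANK": 3}}
    print(handle_epc("EPC-999", table, sent.append), sent)
    print(handle_epc("EPC-001", table, sent.append), sent)
```

Passing the transport as a callable keeps the decision rule testable without a reader or PLC attached.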

This architecture enables real-time synchronization between component identification and control logic execution. It ensures that only verified components are processed, aligning with the system’s broader error-proofing goals.

2.6. Schematic diagram of the RFID base data table

The RFID system uses a relational database to track vehicle components across the production line. Its core table, RFIDBaseData, maps RFID tags to relevant process and vehicle data.

Fig. 3 Structure of RFIDBaseData used to store tag and process information including BaseID foreign key to Base, and columns for PVI, VIN, TagID, PLCI, and TANK
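For orientation, the table layout named in the caption can be written as a schema. The column names and the BaseID foreign key come from the figure caption; everything else is an assumption for the sketch, including the SQLite dialect, the column types, the minimal Base table, and the UNIQUE constraint on TagID (chosen to mirror the rule that an EPC is stored only if it does not already exist).

```python
import sqlite3

SCHEMA = """
CREATE TABLE Base (
    BaseID       INTEGER PRIMARY KEY,
    currentEvent TEXT
);
CREATE TABLE RFIDBaseData (
    BaseID INTEGER NOT NULL REFERENCES Base(BaseID),
    PVI    TEXT,
    VIN    TEXT,
    TagID  TEXT UNIQUE,   -- assumption: one row per tag EPC
    PLCI   TEXT,
    TANK   TEXT
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    """Create both tables on the given connection."""
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    for row in conn.execute("PRAGMA table_info(RFIDBaseData)"):
        print(row[1], row[2])          # column name and declared type
```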

3. Results and discussion

A prototype was developed and tested in a controlled environment to simulate real-world conditions. It included YOLO-based vision, RFID tracking, and PLC coordination. The prototype was evaluated on accuracy, speed, and reliability.

The YOLO model was trained using 500 images and tested on 100 images from chassis and engine components. It achieved 100 % detection accuracy for VIN localization. OCR was applied to 1,700 characters (100 VINs), achieving 98.5 % recognition accuracy. No full VIN was misread; errors at the character level were corrected using validation rules. This is comparable to leading license plate recognition systems with 98-99 % accuracy [4]. To support this claim, we calculated a 95 % confidence interval for a binomial proportion, indicating a range from approximately 97.3 % to 99.3 %. A confusion matrix summary is provided below to illustrate classification performance.

Table 1 Classification performance

| | Predicted: correct | Predicted: incorrect | Total |
|---|---|---|---|
| Actual | 1675 | 25 | 1700 |
| Precision | 1675 / (1675 + 5) = 0.997 | | |
| Recall | 1675 / 1700 = 0.985 | | |
| F1-Score | 2 × (0.997 × 0.985) / (0.997 + 0.985) ≈ 0.991 | | |
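The arithmetic in Table 1 can be reproduced from the counts. The sketch below takes 1675 correct characters, 25 misread characters, and the 5 additional false positives that appear in the precision denominator. It also computes a 95 % interval for the recognition rate using the normal-approximation (Wald) formula. The interval method is an assumption, because the source does not state which one was used. Under that assumption the interval is roughly 98.0 % to 99.1 %, which is narrower than the range reported in the text, so the reported figures may come from a different method.

```python
import math
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Standard definitions from true positives, false positives, false negatives."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def wald_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation confidence interval for a binomial proportion."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


if __name__ == "__main__":
    p, r, f1 = precision_recall_f1(tp=1675, fp=5, fn=25)
    low, high = wald_interval(successes=1675, n=1700)
    print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
    print(f"95% Wald interval: {low:.3%} to {high:.3%}")
```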

RFID performance was tested with 10 tagged vehicle frames. Tags were read within 2 meters with latency under 100 ms, ensuring no production delays. Two VIN mismatches were deliberately introduced. In both cases, the PLC halted the process within one second, before the vehicle entered the next station.

This rapid response confirms the system’s effectiveness for inline error-proofing. Manual scanning systems are slower, depend on operator accuracy, and are less reliable under adverse conditions. The automated system reduces these risks by ensuring first-time quality and reducing labor dependency.

It also aligns with poka-yoke principles by enforcing VIN verification before critical steps. This minimizes the Cost of Poor Quality (COPQ), including scrap, rework, and recalls. Avoiding even a few misbuilds justifies implementation costs.

RFID tags enable persistent tracking, building a complete genealogy for each vehicle. This allows selective containment during quality investigations, avoiding full-batch recalls.

Though tested at a painting station, the system is adaptable. It could verify engine installation, software updates, or part alignment using the same camera infrastructure. Future upgrades may store in-progress data on RFID tags, creating decentralized tracking systems.

Initial issues with image quality were resolved using synchronized camera triggering and localized LED lighting. RFID performance was optimized by strategic antenna placement to minimize metal interference.

Overall, the system demonstrated its scalability, robustness, and value for modern automotive production.

4. Conclusions

This paper introduces a smart VIN verification system integrating deep learning, RFID, and PLC control for automotive error-proofing. It addresses the need for reliable component identification before critical manufacturing steps.

By leveraging YOLO-based object detection and OCR, the system effectively reads VINs under challenging conditions. Cross-verification with RFID data ensures that only validated components proceed in the production line. Real-time PLC decisions further enable automation and traceability.

Tests showed over 98 % recognition accuracy and prompt detection of mismatches, preventing production errors. RFID enabled fast, contactless identification, supporting continuous tracking. These features enhance build reliability, reduce manual workload, and align with Industry 4.0 goals.

Future work includes full-line deployment, long-term performance analysis, integration with MES dashboards, and extended AI inspections. The system offers a scalable foundation for smart manufacturing.

References

[1] F. Buchner, “Automating the part identification method of automotive assembly lines through RFID technology,” M.S. Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2022.

[2] T. Rosenburg, “Error-Proofing with Sensors and RFID,” Assembly Magazine, Dec. 2015.

[3] H. Liu et al., “Vehicle VIN recognition based on deep learning and OCR,” in Proceedings of the 2024 International Conference on Science and Engineering of Electronics (ICSEE 2024), Nov. 2024.

[4] H. Moussaoui et al., “Enhancing automated vehicle identification by integrating YOLO v8 and OCR techniques for high-precision license plate detection and recognition,” Scientific Reports, Vol. 14, No. 1, Jun. 2024, https://doi.org/10.1038/s41598-024-65272-1

[5] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: unified, real-time object detection,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 779–788, Jun. 2016, https://doi.org/10.1109/cvpr.2016.91

About this article

The authors have not disclosed any funding.

The authors express their sincere gratitude to the Automotive Research Laboratory of Tashkent State University of Transport and the Department of Electronics and Instrumentation at Ferghana State Technical University. Their technical infrastructure, expert feedback, and collaborative environment were instrumental in the successful development and testing of the prototype. The project also benefited from internal discussions with faculty and graduate students specializing in automotive mechatronics.

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

The authors declare that they have no conflict of interest.